Last spring, physicians like us were confused. Covid-19 was just starting its deadly journey around the world, afflicting our patients with severe lung infections, strokes, skin rashes, debilitating fatigue, and numerous other acute and chronic symptoms. Armed with outdated clinical intuitions, we were left disoriented by a disease shrouded in ambiguity.

In the midst of the uncertainty, Epic, a private electronic health record giant and a key purveyor of American health data, accelerated the deployment of a clinical prediction tool called the Deterioration Index. Built with a type of artificial intelligence called machine learning and in use at some hospitals prior to the pandemic, the index is designed to help physicians decide when to move a patient into or out of intensive care, and is influenced by factors like breathing rate and blood potassium level. Epic had been tinkering with the index for years but expanded its use during the pandemic. At hundreds of hospitals, including those in which we both work, a Deterioration Index score is prominently displayed on the chart of every patient admitted to the hospital.

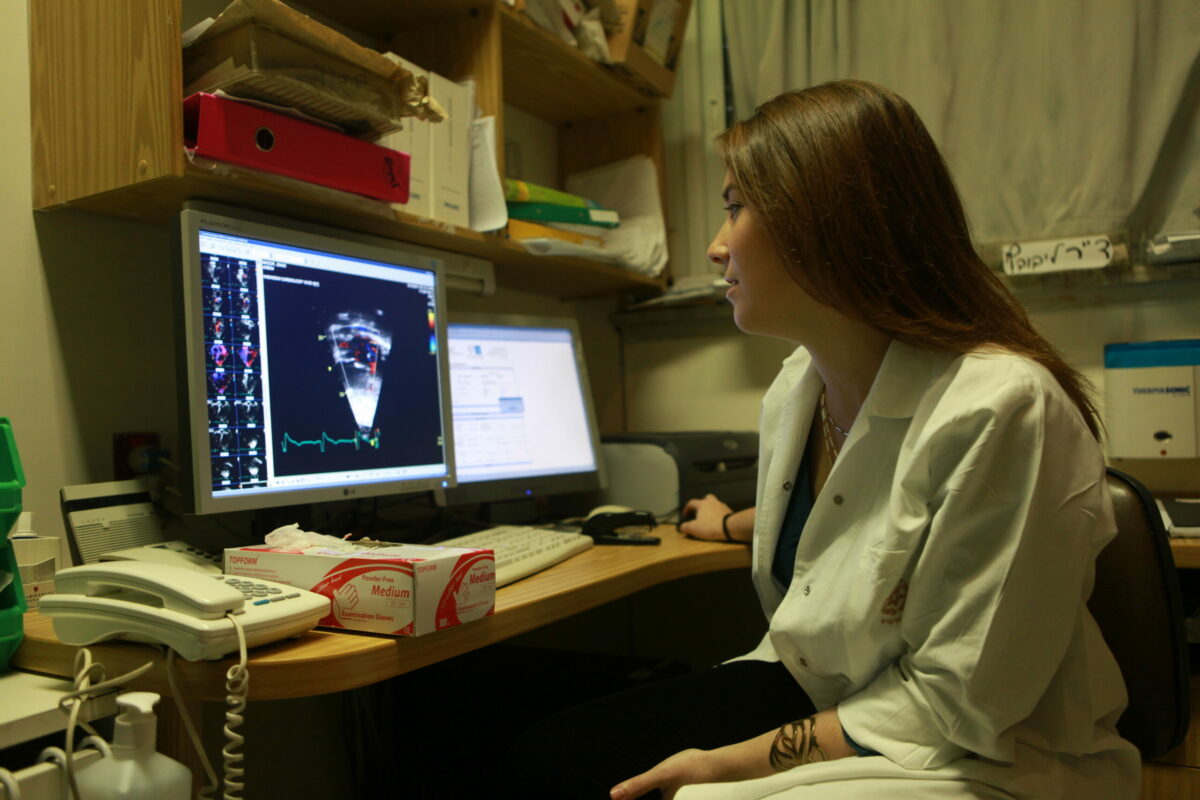

The Deterioration Index is poised to upend a key cultural practice in medicine: triage. Loosely speaking, triage is the act of determining how sick a patient is at any given moment in order to prioritize treatment and allocate limited resources. In the past, physicians have performed this task by rapidly interpreting a patient’s vital signs, physical exam findings, test results, and other data points, using heuristics learned through years of on-the-job medical training.

Ostensibly, the core assumption of the Deterioration Index is that traditional triage can be augmented, or perhaps replaced entirely, by machine learning and big data. Indeed, a study of 392 Covid-19 patients admitted to Michigan Medicine found that the index was moderately successful at discriminating between low-risk patients and those at high risk of being transferred to an ICU, being placed on a ventilator, or dying while admitted to the hospital. But last year’s hurried rollout of the Deterioration Index also sets a worrisome precedent, and it illustrates the potential for such decision-support tools to propagate biases in medicine and change the ways in which doctors think about their patients.

The use of algorithms to support clinical decision making isn’t new. But historically, these tools have been put into use only after a rigorous peer review of the raw data and statistical analyses used to develop them. Epic’s Deterioration Index, on the other hand, remains proprietary despite its widespread deployment. Although physicians are provided with a list of the variables used to calculate the index and a rough estimate of each variable’s impact on the score, we aren’t allowed under the hood to evaluate the raw data and calculations.

Furthermore, the Deterioration Index was not independently validated or peer-reviewed before the tool was rapidly deployed to America’s largest health care systems. Even now, there have been, to our knowledge, only two peer-reviewed published studies of the index. The deployment of a largely untested proprietary algorithm into clinical practice — with minimal understanding of the potential unintended consequences for patients or clinicians — raises a host of issues.

It remains unclear, for instance, what biases may be encoded into the index. Medicine already has a fraught history with race and gender disparities and biases. Studies have shown that, among other injustices, physicians underestimate the pain of minority patients and are less likely to refer women to total knee replacement surgery when it is warranted. Some clinical scores, including calculations commonly used to assess kidney and lung function, have traditionally been adjusted based on a patient’s race — a practice that many in the medical community now oppose. Without direct access to the equations underlying Epic’s Deterioration Index, or further external inquiry, it is impossible to know whether the index incorporates such race-adjusted scores in its own algorithm, potentially propagating biases.

Introducing machine learning into the triage process could fundamentally alter the way we teach medicine. It has the potential to improve inpatient care by highlighting new links between clinical data and outcomes — links that might otherwise have gone unnoticed. But it could also over-sensitize young physicians to the specific tests and health factors that the algorithm deems important; it could compromise trainees’ ability to hone their own clinical intuition. In essence, physicians in training would be learning medicine on Epic’s terms.

Thankfully, there are safeguards that can be put in place relatively painlessly. In 2015, the international Equator Network created a 22-point Tripod checklist to guide the responsible development, validation, and improvement of clinical prediction tools like the Deterioration Index. For example, it asks tool developers to provide details on how risk groups were created, report performance measures with confidence intervals, and discuss limitations of validation studies. Private health data brokers like Epic should always be held to this standard.
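To make the checklist's request for "performance measures with confidence intervals" concrete, here is a minimal, hypothetical sketch of one common measure of how well a risk score separates patients who deteriorate from those who do not: the area under the ROC curve (AUROC), reported with a percentile-bootstrap confidence interval. This is not Epic's method or the Michigan study's analysis; the function names are our own, and the scores and outcomes are invented toy values.

```python
import random

# Hypothetical sketch: AUROC with a percentile-bootstrap confidence interval.
# Nothing here reflects Epic's proprietary index; the data are invented.

def auroc(scores, labels):
    """Chance that a randomly chosen patient who deteriorated (label 1)
    has a higher score than one who did not (label 0); ties count as half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_ci(scores, labels, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval: resample patients with replacement,
    recompute the AUROC each time, and keep the middle (1 - alpha) share."""
    rng = random.Random(seed)
    n = len(scores)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        y = [labels[i] for i in idx]
        if 0 < sum(y) < n:  # AUROC is undefined if a resample has one class
            stats.append(auroc([scores[i] for i in idx], y))
    stats.sort()
    lo = stats[int(alpha / 2 * n_boot)]
    hi = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


# Invented example: ten patients' scores and whether each later deteriorated
# (ICU transfer, ventilation, or death). Illustrative values only.
scores = [12, 25, 33, 41, 47, 52, 58, 63, 70, 81]
labels = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]

auc = auroc(scores, labels)
lo, hi = bootstrap_ci(scores, labels)
print(f"AUROC = {auc:.3f}")  # prints "AUROC = 0.833"
print(f"95% CI = ({lo:.3f}, {hi:.3f})")
```

On a sample this small the interval tends to be wide, which is the point of asking for one: a single headline number says little about how much to trust a score.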

Now that its Deterioration Index is already being used in clinical settings, Epic should immediately release for peer review the underlying equations and the anonymized datasets it used for its internal validation, so that doctors and health services researchers can better understand any implications the index may have for health equity. There need to be clear communication channels to raise, discuss, and resolve any issues that emerge in peer review, including concerns about the score’s validity, prognostic value, bias, or unintended consequences. Companies like Epic should also engage more deliberately and openly with the physicians who use their algorithms; they should share information about the populations on which the algorithms were trained, the questions the algorithms are best equipped to answer, and the flaws the algorithms may carry. Caveats and warnings should be communicated clearly and quickly to all clinicians who use these tools.

The Covid-19 pandemic, having accelerated the widespread deployment of clinical prediction tools like the Deterioration Index, may herald a new coexistence between physicians and machines in the art of medicine. Now is the time to set the ground rules to ensure that this partnership helps us change medicine for the better, and not the worse.